Data Scientist / Software Architect

Introduction

I've been a data analyst and professional software engineer for over 20 years. I do full life-cycle development, from architecture and design through implementation and operations. I specialize in systems for aggregating and analyzing complex data sets. My educational background is in physics and data science, with a focus on orbit dynamics. I construct physics-based models and simulations and perform statistical analysis of the results. I'm very good at rapid prototyping and at the data engineering needed to support extract, transform, and load (ETL) pipelines for numeric and text data in both structured and unstructured formats. In addition to basic statistical analysis, I have experience coding data science algorithms from scratch, including clustering, regression, genetic algorithms, and principal component analysis.

My recent internal R&D work has focused on running LLMs locally in air-gapped, secure environments. I've built several productivity-enhancing applications that take advantage of the remarkable capabilities of LLMs for machine translation and entity extraction, and I have used them to build RAG and KAG systems. By focusing on local-first models and infrastructure, I have provided secure, privacy-enhancing solutions for working with sensitive data.

Feel free to visit my GitHub page 🔗.

Showcase

nograph 🔀

This is a personal project based on the idea of building graph databases on top of NoSQL backends. I have implemented a very lightweight engine for small-scale graph databases (up to several million nodes and edges) that can run on personal hardware. For more information, check out the nograph 🔗 homepage or the GitHub repository 🔗.
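To illustrate the general idea of layering a graph model over a key-value store, here is a minimal Python sketch. It is not nograph's actual design or API: the `KVGraph` class, the key layout (`n:`, `out:`, `in:` prefixes), and the use of a plain dict as a stand-in for a NoSQL backend are all hypothetical choices made for illustration.

```python
class KVGraph:
    """Hypothetical property graph stored in a key-value mapping (a dict stands in for a NoSQL store)."""

    def __init__(self, store=None):
        self.store = store if store is not None else {}

    def add_node(self, node_id, **props):
        # Node properties live under "n:<id>".
        self.store[f"n:{node_id}"] = dict(props)

    def add_edge(self, src, dst, label):
        # Adjacency is denormalized into outgoing and incoming lists so a
        # traversal in either direction is a single key lookup.
        self.store.setdefault(f"out:{src}", []).append((label, dst))
        self.store.setdefault(f"in:{dst}", []).append((label, src))

    def neighbors(self, node_id, label=None):
        """Targets of outgoing edges, optionally filtered by edge label."""
        return [dst for lab, dst in self.store.get(f"out:{node_id}", [])
                if label is None or lab == label]

    def bfs(self, start, max_depth):
        """Breadth-first traversal up to max_depth hops; returns {node_id: depth}."""
        depths = {start: 0}
        frontier = [start]
        for depth in range(1, max_depth + 1):
            next_frontier = []
            for node in frontier:
                for nb in self.neighbors(node):
                    if nb not in depths:
                        depths[nb] = depth
                        next_frontier.append(nb)
            frontier = next_frontier
        return depths


g = KVGraph()
for name in ("sat-1", "sat-2", "debris-9"):
    g.add_node(name, kind="space_object")
g.add_edge("sat-1", "sat-2", "close_approach")
g.add_edge("sat-2", "debris-9", "close_approach")
print(g.bfs("sat-1", max_depth=2))  # {'sat-1': 0, 'sat-2': 1, 'debris-9': 2}
```

Denormalizing edges into both directions trades extra writes for single-key traversals, which suits stores that lack joins. With a real remote backend, appending to an adjacency list would need an explicit read-modify-write (or a list/set type native to that store) rather than mutating a returned Python list.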

HEALPix 🌐

HEALPix stands for Hierarchical Equal Area isoLatitude Pixelation of the sphere. The pixelation (or pixelization) begins with 12 base pixels arranged in three bands: two polar and one equatorial. Each base pixel can be recursively subdivided into 4 equal-area sub-pixels.
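Because every subdivision splits a pixel into 4 equal-area children, the pixel count at refinement order $k$ (with $N_{side} = 2^k$) is $N_{pix} = 12 N_{side}^2$, and each pixel covers $4\pi / N_{pix}$ steradians. The standard-library sketch below shows that bookkeeping, assuming the NESTED numbering scheme (one of the two HEALPix orderings, alongside RING), in which the children of pixel $p$ are $4p$ through $4p+3$. It does not compute pixel boundaries or sky coordinates; a library such as healpy would normally handle those.

```python
import math


def healpix_npix(order):
    """Number of pixels at refinement order k, where N_side = 2**k."""
    nside = 2 ** order
    return 12 * nside * nside


def healpix_pixel_area_sr(order):
    """Area of one pixel in steradians; all pixels share the same area, 4*pi / N_pix."""
    return 4.0 * math.pi / healpix_npix(order)


def nested_children(pixel):
    """Children of a pixel one level down in the NESTED numbering scheme."""
    return [4 * pixel + i for i in range(4)]


def nested_parent(pixel):
    """Parent of a pixel one level up in the NESTED numbering scheme."""
    return pixel // 4


for k in range(4):
    area = healpix_pixel_area_sr(k)
    side_deg = math.degrees(math.sqrt(area))  # rough angular size of one pixel
    print(f"order {k}: N_side={2**k:4d} N_pix={healpix_npix(k):6d} ~{side_deg:.2f} deg")
```

The integer parent/child arithmetic is what makes NESTED ordering convenient for hierarchical binning: aggregating counts to a coarser level is just integer division of the pixel index.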

SGP4 🛰

If you operate a satellite or are involved in space situational awareness (SSA), you know about two-line element sets (TLEs). They are the most common source of orbit data for artificial objects in near-Earth space. Despite the limitations of the format (limited precision, no covariance), the data are freely provided by the US Government for almost all objects, which makes them incredibly useful. The orbit dynamics theory and related source code needed to work with data in this format are known as Simplified General Perturbations 4 (SGP4). David Vallado, T.S. Kelso, and others at CSSI have worked very hard to provide modernized software implementations of SGP4 (with bug fixes and excellent test cases). To demonstrate my familiarity with this propagator and my ability to work in multiple programming languages, I ported their code from C to several other languages. The list currently includes C, C++, C#, Fortran 90, Java, JavaScript, Matlab/Octave, Libre/Open Office BASIC, Python 2/3, R, and Ruby. The code is available in my repository on GitHub 🔗.
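TLEs are fixed-width text, so a couple of basic checks need no propagator at all. The sketch below validates each line's modulo-10 checksum (digits count at face value, a minus sign counts as 1, column 69 holds the result) and turns the line-2 mean motion into an approximate semi-major axis with Kepler's third law. It is not taken from the CSSI code; the WGS-84 constants and the plain Keplerian conversion are assumptions, and SGP4 itself uses WGS-72 constants and its own mean-motion conventions, so use the propagator for anything beyond a sanity check.

```python
import math

MU_EARTH_KM3_S2 = 398600.4418  # WGS-84 gravitational parameter (SGP4 itself uses WGS-72 values)
EARTH_RADIUS_KM = 6378.137      # WGS-84 equatorial radius


def tle_checksum(line):
    """Modulo-10 checksum over columns 1-68: digits at face value, '-' counts as 1."""
    total = 0
    for ch in line[:68]:
        if ch.isdigit():
            total += int(ch)
        elif ch == "-":
            total += 1
    return total % 10


def mean_motion_rev_per_day(line2):
    """Mean motion (revolutions per day) occupies columns 53-63 of line 2."""
    return float(line2[52:63])


def approx_semi_major_axis_km(n_rev_per_day):
    """Rough Keplerian conversion a = (mu / n^2)^(1/3); ignores SGP4's mean-motion details."""
    n_rad_s = n_rev_per_day * 2.0 * math.pi / 86400.0
    return (MU_EARTH_KM3_S2 / n_rad_s ** 2) ** (1.0 / 3.0)


# Sample ISS element set (epoch 2019, day 343).
line1 = "1 25544U 98067A   19343.69339541  .00001764  00000-0  38792-4 0  9991"
line2 = "2 25544  51.6439 211.2001 0007417  17.6667  85.6398 15.50103472202482"

for line in (line1, line2):
    assert tle_checksum(line) == int(line[68]), "checksum mismatch"

a_km = approx_semi_major_axis_km(mean_motion_rev_per_day(line2))
print(f"a ~ {a_km:.1f} km, mean altitude ~ {a_km - EARTH_RADIUS_KM:.1f} km")
```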

SGP4-XP 🛰

In late 2020, the USSF released SGP4-XP, a new orbit propagator that works with TLEs. I presented a paper at AMOS 2021 about how to use SGP4-XP, and I've written a set of utilities and web services to help the community adopt the new propagator. They are available on GitHub as odutils and odutils-web.

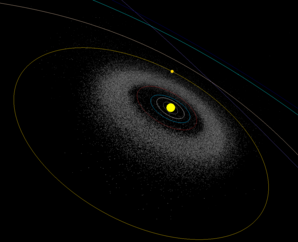

Solar System 🪐

I'm able to build custom 3D displays of data. Here is a simple visualization of the solar system. It starts with the Sun and planets and traces their orbits. Next, it adds 38,000 near-Earth asteroids and finishes with the first 100,000 asteroids from the Minor Planet Center (MPC) database. The heliocentric positions are accurate for the epoch May 5, 2025.

Feel free to scroll, zoom, and rotate. Individual layers can be turned on and off with the checkboxes in the upper left.
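Placing each body at its heliocentric position for a given epoch comes down to solving Kepler's equation $E - e\sin E = M$ and rotating the in-plane position into the ecliptic frame. The sketch below is a simple two-body illustration, not the code behind the visualization: it assumes the mean anomaly has already been advanced to the display epoch and ignores planetary perturbations, and the sample asteroid elements are hypothetical.

```python
import math


def solve_kepler(mean_anomaly, ecc, tol=1e-12, max_iter=50):
    """Solve Kepler's equation E - e*sin(E) = M for the eccentric anomaly E (Newton's method)."""
    E = mean_anomaly if ecc < 0.8 else math.pi  # pi is a safer start for high eccentricity
    for _ in range(max_iter):
        f = E - ecc * math.sin(E) - mean_anomaly
        E -= f / (1.0 - ecc * math.cos(E))
        if abs(f) < tol:
            break
    return E


def heliocentric_position(a, ecc, inc, node, argp, mean_anomaly):
    """Ecliptic x, y, z (same units as a) from classical elements; angles in radians."""
    E = solve_kepler(mean_anomaly, ecc)
    # Position in the orbital plane, with x pointing toward perihelion.
    x_orb = a * (math.cos(E) - ecc)
    y_orb = a * math.sqrt(1.0 - ecc * ecc) * math.sin(E)
    # Rotate by argument of perihelion, inclination, then longitude of ascending node.
    cw, sw = math.cos(argp), math.sin(argp)
    cn, sn = math.cos(node), math.sin(node)
    ci, si = math.cos(inc), math.sin(inc)
    x = (cn * cw - sn * sw * ci) * x_orb + (-cn * sw - sn * cw * ci) * y_orb
    y = (sn * cw + cn * sw * ci) * x_orb + (-sn * sw + cn * cw * ci) * y_orb
    z = (sw * si) * x_orb + (cw * si) * y_orb
    return x, y, z


# Hypothetical main-belt asteroid elements (AU and radians), for illustration only.
elements = dict(a=2.7, ecc=0.1, inc=math.radians(10.0), node=math.radians(80.0),
                argp=math.radians(70.0), mean_anomaly=math.radians(45.0))
x, y, z = heliocentric_position(**elements)
print(f"r = {math.sqrt(x * x + y * y + z * z):.4f} AU")
```

For a display of 100,000+ objects the same math would normally be vectorized (for example with NumPy), but the per-object logic is unchanged.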

CesiumJS 🌎

AGI's open-source 3D globe and in-browser GIS framework is an amazing tool. I'm not yet an expert, but I hope more projects adopt it for building intuitive displays of geospatial and time-based data. It is well suited for displaying data in HEALPix bins. Also, using the JavaScript version of SGP4 that I ported, you can fly satellites in Cesium's 3D environment.

Research ⚛

Orbit Dynamics ☉ ☄

All three of my academic degrees have included a research project related to orbit dynamics. For my undergraduate degree I studied the binary pulsar PSR 1913+16. In addition to simulating the orbits of hypothetical exoplanets in this binary system, my force model had to account for general relativity. For my master's research project I implemented a symplectic integrator to study resonances in the asteroid belt. These resonances, primarily with the orbit of Jupiter, cause the orbits of asteroids at certain locations to become increasingly eccentric over time, eventually leading to their ejection from the belt. This is the primary cause of the Kirkwood gaps. For my PhD, I studied the orbits of interacting galaxies. I built a tool used by thousands of citizen scientist volunteers from the Galaxy Zoo project. We were able to determine the relative orbits of galaxies by matching simulations to current images of their post-interaction morphologies.
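A symplectic integrator preserves the phase-space structure of Hamiltonian dynamics, so the energy error stays bounded instead of drifting, which is what makes long resonance integrations trustworthy. The sketch below uses the simple kick-drift-kick leapfrog on a Sun-plus-test-particle problem in units where GM = 1. It is a generic illustration, not the integrator from the research project; asteroid-belt studies often use more specialized schemes (for example Wisdom-Holman mappings), which are not shown here.

```python
import math


def accel(x, y, gm=1.0):
    """Point-mass gravitational acceleration toward the origin."""
    r3 = (x * x + y * y) ** 1.5
    return -gm * x / r3, -gm * y / r3


def energy(x, y, vx, vy, gm=1.0):
    """Specific orbital energy: kinetic plus potential."""
    return 0.5 * (vx * vx + vy * vy) - gm / math.hypot(x, y)


def leapfrog(state, dt, n_steps, gm=1.0):
    """Kick-drift-kick leapfrog, a second-order symplectic integrator."""
    x, y, vx, vy = state
    ax, ay = accel(x, y, gm)
    for _ in range(n_steps):
        vx += 0.5 * dt * ax  # half kick
        vy += 0.5 * dt * ay
        x += dt * vx         # full drift
        y += dt * vy
        ax, ay = accel(x, y, gm)
        vx += 0.5 * dt * ax  # half kick
        vy += 0.5 * dt * ay
    return x, y, vx, vy


# Eccentric orbit (e = 0.5, a = 1) starting at perihelion, integrated for 20 orbital periods.
ecc = 0.5
state0 = (1.0 - ecc, 0.0, 0.0, math.sqrt((1.0 + ecc) / (1.0 - ecc)))
period = 2.0 * math.pi  # T = 2*pi*sqrt(a^3 / GM) with a = GM = 1
final = leapfrog(state0, dt=period / 2000, n_steps=40_000)
energy_start, energy_end = energy(*state0), energy(*final)
rel_err = abs((energy_end - energy_start) / energy_start)
print(f"relative energy error after 20 orbits: {rel_err:.2e}")
```

A non-symplectic method of similar cost (for example forward Euler) would show a steadily growing energy error over the same run, which is why symplectic schemes are preferred for long-term orbit studies.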

John Wallin's restricted three-body code is very convenient for parameter studies of the orbits of interacting galaxies. Setting the origin of the coordinate system at the center of one of the galaxies greatly simplifies the task of determining the proper viewing angles for reconstructing merger morphology. His code was originally written in FORTRAN, and he later added a new potential to better simulate dark matter halos. I ported both potentials from FORTRAN to Java and then to JavaScript, so you can run restricted three-body simulations of interacting galaxies in your browser. Follow the link to re-run the best-fit models from the Galaxy Zoo: Mergers project: JSPAM in the browser 🔗.

Machine Learning with Computer Vision 🤖 👁

The research group I was part of during my PhD was attempting to use computer vision and machine learning to automatically rate simulation outputs and determine whether a match had been found for specific systems of interacting galaxies. Using the best fitness function available in the literature at the time, we were able to easily identify exact or nearly exact matches. However, the function had a very narrow convergence radius, and even genetic algorithms failed to reliably optimize the simulation parameters.

To overcome this, our group manually tried many different feature extraction methods from the OpenCV library to improve the fitness function, but we were ultimately unsuccessful.

The Galaxy Zoo: Mergers project involved almost 10,000 citizen scientist volunteers who reviewed millions of simulations. Their ratings let us score simulation output and establish a smoother, albeit empirical, fitness function. With a rating assigned to each simulation output, we were able to attempt the machine learning problem again as a supervised learning exercise.

We used Lior Shamir's WNDCHRM software 🔗 to classify the simulation results into 4 classes ranging from bad to good fit. WNDCHRM generates almost 3,000 features for each image and uses them to classify the data. In addition to classifying the data, the software computes a Fisher score for each feature to determine which features offer the most information gain when separating the input images into classes. Using the Fisher scores, we identified a small number of the thousands of features and used them as the basis of a new fitness function. The revised function was smoother and increased the convergence radius for our genetic algorithm.
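A Fisher score ranks a feature by how far apart the class means are relative to the spread within each class. The sketch below uses one common textbook form, $F_j = \sum_c n_c(\mu_{c,j} - \mu_j)^2 / \sum_c n_c \sigma_{c,j}^2$; WNDCHRM's exact normalization may differ, and the toy data and function names are hypothetical.

```python
from statistics import fmean, pvariance


def fisher_scores(samples, labels):
    """Per-feature Fisher score: between-class scatter divided by within-class scatter.

    samples: list of equal-length feature vectors; labels: one class label per sample.
    """
    n_features = len(samples[0])
    classes = sorted(set(labels))
    scores = []
    for j in range(n_features):
        overall_mean = fmean(s[j] for s in samples)
        between = within = 0.0
        for c in classes:
            values = [s[j] for s, lab in zip(samples, labels) if lab == c]
            n_c = len(values)
            between += n_c * (fmean(values) - overall_mean) ** 2
            within += n_c * pvariance(values)
        scores.append(between / within if within > 0 else float("inf"))
    return scores


def top_features(scores, k):
    """Indices of the k highest-scoring features."""
    return sorted(range(len(scores)), key=lambda j: scores[j], reverse=True)[:k]


# Toy data: feature 0 separates the classes, feature 1 is noise with equal class means.
X = [[0.1, 5.0], [0.2, 3.0], [0.15, 4.0], [0.9, 4.5], [1.0, 3.5], [0.95, 4.0]]
y = ["bad", "bad", "bad", "good", "good", "good"]
scores = fisher_scores(X, y)
print(scores, top_features(scores, 1))
```

Keeping only the top-ranked features, as described above, shrinks a few thousand candidates to a handful that can be combined into a fitness function.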

Simple Regression 📈

One of my hobbies is sports analytics. A lot of useful analysis can be done with simple regression, either linear or logistic. As with all machine learning projects, it is important to have a baseline model for comparison. Very often, simple regression can explain much of the variance. This establishes an important benchmark for comparing results from more complicated algorithms and extensive feature sets: the complexity of an advanced model needs to be justified by an improvement over the simple results before it is implemented in production.

- Logistic regression for field goal percentage

- Linear and logistic regression for NCAA basketball
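As a small illustration of the baseline idea, the sketch below fits a logistic model of field goal success versus distance to grouped data with Newton-Raphson (IRLS), then compares it against a constant-rate baseline using McFadden's pseudo R². The numbers are made up for illustration, not real field goal statistics, and the function names are hypothetical.

```python
import math


def fit_binomial_logistic(xs, attempts, successes, iters=50):
    """Fit p(x) = 1 / (1 + exp(-(b0 + b1*x))) to grouped binomial data by Newton-Raphson (IRLS)."""
    b0 = b1 = 0.0
    for _ in range(iters):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for x, n, k in zip(xs, attempts, successes):
            p = 1.0 / (1.0 + math.exp(-(b0 + b1 * x)))
            w = n * p * (1.0 - p)
            g0 += k - n * p          # gradient of the log-likelihood
            g1 += (k - n * p) * x
            h00 += w                 # Fisher information (negative Hessian)
            h01 += w * x
            h11 += w * x * x
        det = h00 * h11 - h01 * h01
        step0 = (h11 * g0 - h01 * g1) / det
        step1 = (h00 * g1 - h01 * g0) / det
        b0 += step0
        b1 += step1
        if abs(step0) + abs(step1) < 1e-10:
            break
    return b0, b1


def log_likelihood(prob, xs, attempts, successes):
    """Binomial log-likelihood of grouped data (constant terms omitted)."""
    return sum(k * math.log(prob(x)) + (n - k) * math.log(1.0 - prob(x))
               for x, n, k in zip(xs, attempts, successes))


# Made-up field goal data for illustration: distance (yards), attempts, makes.
distance = [20, 30, 40, 50, 55]
attempts = [100, 100, 100, 100, 50]
makes = [98, 92, 80, 60, 25]

b0, b1 = fit_binomial_logistic(distance, attempts, makes)
overall_rate = sum(makes) / sum(attempts)


def model(x):
    return 1.0 / (1.0 + math.exp(-(b0 + b1 * x)))


def baseline(x):
    return overall_rate  # constant-rate baseline ignores distance


ll_model = log_likelihood(model, distance, attempts, makes)
ll_base = log_likelihood(baseline, distance, attempts, makes)
pseudo_r2 = 1.0 - ll_model / ll_base  # McFadden's pseudo R^2
print(f"b0={b0:.3f} b1={b1:.4f} P(make at 45 yd)={model(45):.3f} pseudo-R2={pseudo_r2:.3f}")
```

Any more elaborate model (extra features such as weather or kicker identity, or a nonlinear learner) would need to beat this simple fit's log-likelihood on held-out data to justify its added complexity.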

Professional Experience 💼

Software Development 💻

Almost all of my clients require non-disclosure agreements in order for me to access their proprietary data. In compliance with those agreements I will discuss projects only in general terms with a focus on the skills that I applied to provide solutions in each case.

- Delivered production code in the following languages: Java, JavaScript, C/C++, Python, Ruby, Fortran, and MATLAB

Cloud Architectures ☁

I've worked with several different cloud providers from design stage through production.

- Amazon Web Services (AWS)

  - I ported a legacy web server/RDBMS combination to AWS, making use of EC2, RDS, ELB, S3, and more. I continue to provide O&M, including managing snapshots and AMIs.

  - Developed a desktop utility that uses custom PKI authentication and shares files via S3.

  - My PhD research project was hosted on AWS. We used SQS, EC2, ELB, and RDS.

  - I used AWS to host dozens of EC2 instances performing orbit determination calculations for a recent research paper.

- Google Cloud

  - I ported a small business website to be hosted out of a Google Cloud Storage bucket, cutting its hosting costs by more than half.

- Microsoft Azure

  - I architected a complete data science pipeline to be hosted on Azure. Covering everything from raw data handling to simulations, crowdsourcing, and final data analysis, the pipeline would have been an end-to-end solution if the project had moved forward.

Information System Security 🔒

Most of my clients have required custom authentication and authorization frameworks. Over my career I have evolved these capabilities from simple usernames and passwords stored in a database to mutual PKI authentication with client certificates and custom LDAP directories. In addition to building the security layer in the software, I have extensive experience documenting security controls. I have prepared documentation that supported multiple software systems through their entire security assessment and authorization (A&A) process based on US federal government standards. The most recent security control standard I have worked with is an extension of NIST SP 800-53.

- Implemented role-based access control for J2EE containers and custom ThreadLocal implementations.

- Custom authentication and Realms for Tomcat, JBoss, Jetty, Apache HTTP, Spring Security, and JAAS plugins.

- Experience with username/password stored in DB, LDAP, Kerberos, and custom PKI backends.

- Experience with credential management ranging from local encrypted files and Java keystores to the Microsoft cryptographic store and AWS.

Teaching / Training 📚

- I was a laboratory instructor at George Mason University for 8 years, teaching one or two lab sections per semester of an Introduction to Astronomy course.

- For my PhD research project I created a detailed online tutorial and training videos to teach citizen science volunteers how to operate the browser-based simulation of interacting galaxies I had coded.

- I've created user guides and tutorials for many of my software customers and conducted small-group demonstrations and one-on-one training. I also implemented an automated tutorial framework for a desktop application that actually controlled the user's mouse for each step of the tutorial.

Education 🎓

Publications 📄

I have had the honor of working with many talented researchers. Where possible we have published our results and made our research software available as open source.

- Holincheck, A., & Cathell, J. (2022). Single-pass, Single-station, Doppler-only Initial Orbit Determination. AAS 2022 🔗

- Holincheck, A., & Cathell, J. (2021). Improved Orbital Predictions using Pseudo Observations - Maximizing the Utility of SGP4-XP. AMOS 2021 🔗

- Cathell, J., & Holincheck, A. (2021). Simplified Conjunction Analysis using a Graph Database for Identifying High Risk Objects. AMOS 2021 🔗

- Wallin, J. F., Holincheck, A. J., & Harvey, A. (2016). JSPAM: A restricted three-body code for simulating interacting galaxies. Astronomy and Computing, 16, 26-33. ADS 🔗 arXiv 🔗

- Holincheck, A. J., Wallin, J. F., Borne, K., Fortson, L., Lintott, C., Smith, A. M., ... & Parrish, M. (2016). Galaxy Zoo: Mergers–Dynamical models of interacting galaxies. Monthly Notices of the Royal Astronomical Society, 459(1), 720-745. ADS 🔗 arXiv 🔗

- Wallin, J. F., Holincheck, A., & Harvey, A. (2015). JSPAM: Interacting galaxies modeller. Astrophysics Source Code Library. ADS 🔗

- Holincheck, A. (2014, January). A Pipeline for Constructing A Catalog of Multi-Method Models of Interacting Galaxies. In Bulletin of the American Astronomical Society (Vol. 223, p. 324). ADS 🔗

- Holincheck, A. (2013). A Pipeline for constructing a catalog of multi-method models of interacting galaxies (Doctoral dissertation). GMU 🔗

- Shamir, L., Holincheck, A., & Wallin, J. (2013). Automatic quantitative morphological analysis of interacting galaxies. Astronomy and Computing, 2, 67-73. ADS 🔗 arXiv 🔗

- Wallin, J., Holincheck, A., Borne, K., Lintott, C., Smith, A., Bamford, S., & Fortson, L. (2010, June). Tasking citizen scientists from Galaxy Zoo to model galaxy collisions. In Galaxy Wars: Stellar Populations and Star Formation in Interacting Galaxies (Vol. 423, p. 217). ADS 🔗

- Holincheck, A., Wallin, J., Borne, K., Lintott, C., Smith, A., Bamford, S., & Fortson, L. (2010, June). Tasking Citizen Scientists from Galaxy Zoo to Model Galaxy Collisions: Preliminary Results, Interface, Analysis. In Galaxy Wars: Stellar Populations and Star Formation in Interacting Galaxies (Vol. 423, p. 223). ADS 🔗

- Holincheck, A., Wallin, J., Borne, K., Lintott, C. J., & Smith, A. (2010, January). Building a catalog of dynamical properties of interacting galaxies in SDSS with the aid of citizen scientists. In Bulletin of the American Astronomical Society (Vol. 42, p. 383). ADS 🔗

- Holincheck, A. J. (1998). An investigation of the orbit of the binary pulsar PSR 1913+16.